SELECT LEFT(physical_name, 1) as Drive,

SUM(size)*8/1024 as SizeMb

FROM sys.master_files

GROUP BY LEFT(physical_name, 1)

ORDER BY Drive;

And then I compare the numbers I got back against what Windows says. Hmm, odd. SQL says it’s using up 296,869 Mb (that’s ~289 Gb) of space on U:\, but when I go to Start > Computer it says it’s using up 390Gb of space on U:\.

Rogue files! Rogue files on my data drive! I now have to HUNT DOWN AND PUNISH those 101 Gb of files freeloading on my data drive. And oh, how they’ll pay….

First, Gather Data and Compare

Step 1: Get the physical_name of all files on that drive from sys.master_files. That’s easy: SELECT physical_name FROM sys.master_files WHERE physical_name like ‘U%’;

Step 2: Open powershell, navigat to U:\, and run get-childitem -name -recurse -filter *.*

Step 3: Compare the lists. You can, of course, import to SQL and compare that way, or just paste each list into Excel for a look-see.

Sure enough, there were about 100Gb of duplicate data files – MDFs, NDFs, and LDFs, if you can believe it! – on that server that hadn’t been touched in years. Time for vengance…

Delete Files, if you Dare

There are a number of ways we could go about removing these files, of course. In my case, it so happens that all my current, supposed-to-be-there files have recent modification dates, and everything else is pre-2012. That makes it very easy!

Let’s start with a test script, that will just list the things we want to delete:

get-childitem “U:\SQL03\” | where {$_.lastwritetime -lt (get-date).adddays(-365) } |% {write-output $_.fullname}

Explanation:

- get-childitem “U:\SQL03\” | Get all objects in the specified directory, and pipe them to the next operation.

- where {$_.lastwritetime -lt (get-date).adddays(-365) } | Test each item’s “last write time” date against today -365 days; if they’re earlier than that date, pipe them to the next operation.

- % {write-output $_.fullname} Take each item and output its name.

Awesome. All we have to do to change this into a dangerous, file-destroying script*is to replace write-output with remove-item.

Wait, I could use this to routinely clean up old backups too, right?

Funny you should ask! Yes.

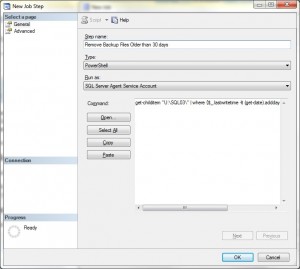

Let’s say you want to delete backup files in a dedicated folder that are older than N days, and repeat regularly. Just take this and schedule it in a SQL Agent job! (You might want to change the -365 to -30, or whatever your given retention period is, in days.)

Yeah, but my SQL backups go to a UNC share 🙁

Except that your backups aren’t on a local drive; they’re on a UNC share, and you’re getting a weird error. No problemo!

Your new, file-destroying script* that will indeed delete all files older than 30 days now looks like this:

cd “\\Server01\SQLShare”; get-childitem “\\Server01\SQLShare \SQL03\FULL” | where {$_.lastwritetime -lt (get-date).adddays(-30) } |% {remove-item $_.fullname}

Let’s make this slightly safer, and only delete the *.bak files older than 30 days:

cd “\\Server01\SQLShare”; get-childitem “\\Server01\SQLShare \SQL03\FULL” -filter *.bak | where {$_.lastwritetime -lt (get-date).adddays(-30) } |% {remove-item $_.fullname}

There we go, wielding Powershell like Foe-hammer in a goblin cave**. Feels good, don’t it?

Happy days,

Jen McCown

http://www.MidnightDBA.com/Jen

*Note that the sys.master_files “size” column isn’t in Mb, but in pages. To translate pages to Mb, SELECT name, (size*8)/1024 as SizeInMb from sys.master_files.

*Which you will test thorougly before you use in a production environment, So Help You Codd.

** Too nerdy?

Inspirational code source: Powershell delete files in subfolder older than 30 days with out deleting subfolders