I’ll show you the way I do it though, and you can either adopt it or not. Frankly, I’ll be really interested in seeing if anyone leaves a comment with a better way, because I just stumbled on this about a year ago, so I don’t know how it’s usually done.

Anyway, we’re going to turn to PowerShell for the answer… there’s a shock, huh?

Here’s the simple code it takes to pull the BIOS info that tells you whether a box is a VM:

gwmi Win32_BIOS -ComputerName "Server1"

That’s pretty easy, right?
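If you’re on PowerShell 6 or later, gwmi (Get-WmiObject) isn’t there anymore, so here’s a rough sketch of the same query using Get-CimInstance instead. This is a swapped-in cmdlet, not what the rest of this post uses, and the function name Get-BiosSummary is made up for illustration. It also assumes WinRM remoting is enabled on the target box, since that’s what Get-CimInstance uses for remote calls by default.

```powershell
# Same Win32_BIOS query, trimmed to the properties this post cares about.
# Get-BiosSummary is a hypothetical helper name, not a built-in cmdlet.
function Get-BiosSummary {
    param(
        [Parameter(Mandatory)]
        [string]$ComputerName
    )

    # Get-CimInstance stands in for gwmi here; it assumes WinRM is reachable.
    Get-CimInstance -ClassName Win32_BIOS -ComputerName $ComputerName |
        Select-Object PSComputerName, Manufacturer, SerialNumber, Version
}

# Usage ("Server1" is the same placeholder name as above):
# Get-BiosSummary -ComputerName "Server1"
```

SerialNumber and Version are the two properties that matter for the checks that follow.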

Now for the results. I’ve only got 2 VM platforms to test with, so if you’ve got something other than VMware or Hyper-V then you’ll have to devise your own test.

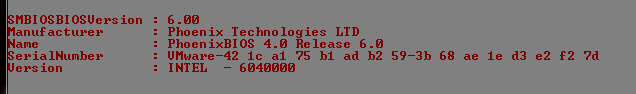

For VMWare, the results will look like this:

Notice the SerialNumber has “VMware” in it? That’s what you’re looking for.

Now, to pick out the boxes in your LAN that are VMs, you have but to filter on that criterion. It would look something like this:

$a = "Server1", "Server2", "Server3"

$a | %{$bios = gwmi Win32_BIOS -ComputerName $_; If($bios.SerialNumber -match "VMware") {"$_ is Virtual"}}

Ok, so it’s not a tremendously useful example, but it shows you how the simple filter would work.

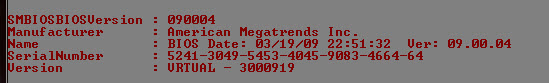

Now let’s move on to Hyper-V. That output would look like this:

This time notice that it doesn’t say “Hyper-V”, and there’s nothing special in the SerialNumber either. This time you have to look in the Version column, which has “VRTUAL” in it. It would be nice if it said Hyper-V, but it doesn’t. So now you can apply the same filter as above, just against Version instead of SerialNumber, to find your VMs if you’re in a Hyper-V shop.

$a = "Server1", "Server2", "Server3"

$a | %{$bios = gwmi Win32_BIOS -ComputerName $_; If($bios.Version -match "VRTUAL") {"$_ is Virtual"}}

Now, let’s put them together. If you’re in a mixed shop like I am, you’ll want to know what kind of VM your SQL box is in so you know which VM admin you need to work with when you have problems. So this last script will tell you which VM your boxes are in. Now you can query all of your servers and put that info into a table so you have it always. And don’t forget to run it periodically to make sure nothing’s changed. Because we’re always moving boxes into VMs, and even taking a few back out. So keeping up with the latest can be useful. I run mine once a week.

$a = "Server1", "Server2", "Server3"

$a | %{

$bios = gwmi Win32_BIOS -ComputerName $_;

if($bios.Version -match "VRTUAL") {"$_ is Hyper-V"}

if($bios.SerialNumber -match "VMware") {"$_ is VMWare"}

if($bios.Version -notmatch "VRTUAL" -and $bios.SerialNumber -notmatch "VMware") {"$_ is Physical"}

}
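Since the point is to get this into a table, here’s a rough sketch of the same logic emitting objects instead of strings. The function names Get-VMPlatform and Get-ServerPlatform, the column names, and the "Unknown" bucket for boxes that don’t answer are all my own inventions for illustration, and the sample BIOS values at the bottom are made up so you can try the helper without hitting a real server.

```powershell
# Hypothetical helper: classify a Win32_BIOS-like object using the two
# markers from this post (Version has "VRTUAL", SerialNumber has "VMware").
function Get-VMPlatform {
    param($Bios)

    if ($null -eq $Bios)                        { 'Unknown' }   # query failed or box unreachable
    elseif ($Bios.Version -match 'VRTUAL')      { 'Hyper-V' }
    elseif ($Bios.SerialNumber -match 'VMware') { 'VMware' }
    else                                        { 'Physical' }
}

# Hypothetical wrapper: one output row per server, ready for a table or CSV.
function Get-ServerPlatform {
    param([string[]]$ComputerName)

    foreach ($name in $ComputerName) {
        # Same gwmi call as above; errors are suppressed so a dead box
        # shows up as "Unknown" instead of stopping the loop.
        $bios = Get-WmiObject Win32_BIOS -ComputerName $name -ErrorAction SilentlyContinue
        [pscustomobject]@{
            Server    = $name
            Platform  = Get-VMPlatform $bios
            CheckedOn = Get-Date
        }
    }
}

# Made-up sample values, only to exercise the helper locally.
$fakeHyperV = [pscustomobject]@{ Version = 'VRTUAL - 0000000'; SerialNumber = '0000-0000' }
Get-VMPlatform $fakeHyperV   # Hyper-V
```

From there, something like `Get-ServerPlatform "Server1", "Server2", "Server3" | Export-Csv platforms.csv -NoTypeInformation` would give you a file you can load wherever you keep your server inventory.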

I would have loved to use the SWITCH statement here, but since I’m having to query 2 separate columns it wouldn’t have been the easiest way to do it. However, just in case you get a chance to use it, here’s a quick lesson on SWITCH. It’s like the CASE statement you see in pretty much every language out there. I won’t bother with explaining the details of the syntax because I think it’s fairly self-explanatory. If you do need help though, ping me and I’ll give you a hand, or you could do the responsible thing and bing it on google first.

But here’s a quick example of how you’d use SWITCH.

$a = "hello"

switch ($a)

{

"hello" {"You typed the right word."}

default {"You typed something other than 'hello'."}

}

Again, it’s not a particularly useful example, but it does show you how SWITCH works. It’s really just a CASE statement.
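For what it’s worth, one possible way to squeeze the two-column VM check into SWITCH is to glue Version and SerialNumber into a single string and let switch -Regex pattern-match against it. This is just a sketch: the function name Get-VMPlatformBySwitch is hypothetical, and because the two fields are joined, a marker showing up in either one will match, which is a little looser than checking each column separately.

```powershell
# Hypothetical helper: the VM check from this post done with switch -Regex.
function Get-VMPlatformBySwitch {
    param($Bios)

    # Join both properties so a single switch can test them together.
    switch -Regex ("$($Bios.Version) $($Bios.SerialNumber)") {
        'VRTUAL' { 'Hyper-V'; break }   # break stops later clauses from also firing
        'VMware' { 'VMware';  break }
        default  { 'Physical' }
    }
}

# Made-up sample values, only to show the call.
$fakeVMware = [pscustomobject]@{ Version = 'ACME - 1'; SerialNumber = 'VMware-00 00 00' }
Get-VMPlatformBySwitch $fakeVMware   # VMware
```

The break statements matter because SWITCH keeps evaluating the remaining clauses after a match unless you tell it to stop.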