One of the biggest battles I fight when I teach PS or show it to different companies is adoption. DBAs and managers just don’t get why they should be using PS to manage their environments. And the reason so many of them are slow to adopt is because the right message is very hard to come by. In fact, I see the wrong message all the time. This was driven home again just now as I received my “SQL Server Pro UPDATE” email (read it here). The editorial was about why DBAs should care about PS. It was written by Michael Otey (pronounced Otee) and I’m sorry to say that as the Sr. Technical Director he’s completely missed the mark. He’s managed to relegate the most important management tool DBAs have to a mere sideline player that allows you to combine SQL work with OS-level work. Dude, you’ve completely missed the boat!!!

I’ve said this a number of times and I’ll say it again here. Let’s get the right message out about PS. Let’s start telling everyone that truth about it and that truth is that you’re going to get left behind if you don’t know PS and you’re not going to be able to provide true value to your company.

I’m a working production DBA in a very large shop. And I can tell you with absolute authority that PS is more than just a way to give you some OS-level functionality in your SQL processes. PS is vital to the management of my shop. The primary role of PS is to allow you to manage hundreds of objects at once with just a few lines of code. As you’ve seen in my videos again and again, and in my classes again and again, you just can’t manage any decently sized environment by hand. It’s just too much and it’s accident-prone.

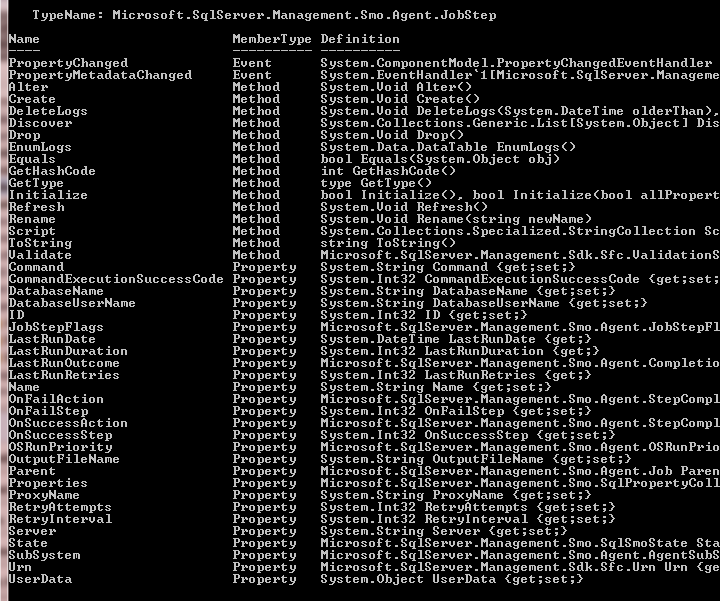

Now, a large environment doesn’t have to mean hundreds of servers. Oh no, it can mean dozens of DBs on a single server. It can mean dozens of schemas in a single DB, or dozens of tables, SPs, views, etc. Or it can even mean dozens of jobs or logins. It doesn’t matter what you’re cycling through. The point is that PS is much more efficient at managing these objects than T-SQL will ever be. There may be a built-in SP for running a T-SQL statement against all the DBs on your server, and there’s even one for running something on all the tables I believe. But there’s nothing for all the jobs, or SPs, or views, or logins, or credentials, or any of the other many objects you can manage natively with PS. With just a few characters of code you can manage all the tables in your DB. And with just a few more characters you can do that same operation against all the DBs on your server. And with yet just a few more characters of code, you can do that same operation against all the DBs across as many servers as you like. THAT’S THE POWER OF POWERSHELL. It’s more than just a way to manage files while you’re doing some T-SQL operations.

A perfect example is adding user accounts to DBs on several boxes. We quite often get contractors who come in and do specific work for us and they need to be given rights to all of the environments for a given application. So this one we have has 500+ DBs on each server. There are 7 prod servers, 3 QA servers, and 5 dev boxes. All of them have 500+ DBs on them. So that’s 15 servers, and at least 7500 DBs that I have to add these users to. And using windows groups will only take you so far because when the next contractor comes in he may need different rights so you still find yourself modifying the perms regardless. The point is I go through this exercise about once every 2mos and PS has made it a trivial matter. And that’s just ONE example.

The time has come to stop downplaying the role of Powershell in SQL Server. DBAs need to be running to it. We’re being asked to do more and more with less time and fewer resources. And there are too many courses being taught, too many SQLSaturday sessions, too many videos (FREE on MidnightDBA.com) for you to remain in the dark any longer. I’ve managed 900+ servers by myself using mainly PS. I’d say it goes quite a bit deeper than being a helper to keep you from using xp_cmdshell.