This is a follow-up to my previous post here.

Wow, what a day. OK, so I just got off the phone with the tech lead for that company. It was enlightening, to say the least. It was a longer call than I expected or even wanted, but my blood pressure’s gone down a bit now. I obviously can’t recount the entire conversation for you, but here are the highlights:

V = Vendor

M = Me

V: Hi Sean, this is Josh (not his real name) with X, and I was told you wanted to talk to me.

M: No, the last thing in the world I wanna do is talk to you, but I feel in order to get our issue resolved I have no choice.

V: Yeah, Cornhole (not his real name) told me you got upset with him when he was just trying to explain to you what your issue was, and then you insisted on speaking with me.

M: This is just incredible. No, I didn’t get upset with him for explaining the issue to me. I got upset with him when I told him his explanation was flat-out wrong and then he called me an idiot. He didn’t even hint at it. He called me an idiot outright.

V: Really? ’Cause that’s not the story I got at all. I heard that he was calmly explaining that your backups were the issue and you just exploded on him.

M: No, that’s NOT what happened at all. That little turd actually called me stupid for not buying his asinine explanation for our issue, and then I only exploded after he did that a couple of times.

…

V: Well, what don’t you believe about it, then? Because we’ve seen many times where backups will cause processes to block.

M: No, you haven’t. Listen, I realize that not everyone can spend their days studying SQL, but one simple fact is that backups don’t block processes. Period. I’ve been doing nothing but DBs in SQL Server for over 15 years against TBs of data, and nowhere have I ever seen a backup block a process. The problem is that you’ve got like 3 tables with TABLOCK in that sp, and that’s what’s causing your blocking. Don’t you think it’s funny that it only clears up AFTER you kill that process?

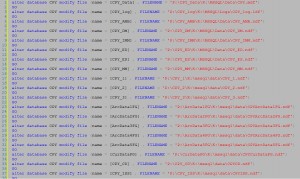

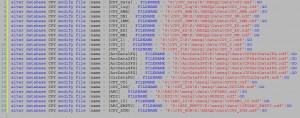

V: Where did you get the code? Those sps are encrypted.

M: Encryption only stops amateurs. And while we’re at it, what’s this script I hear about that you’ve got to unconfuse SQL to give you the real spid for the process?

V: Where did you hear that backups don’t block processes? It’s well-known here that it’s one of our biggest problems.

M: No, your biggest problem is your TABLOCKs. And I heard it from the truth.

V: What is a tablock?

M: A table-level lock. You’re locking the entire table just to read data.

V: I don’t know about that, I’ve never seen the code because it’s encrypted.

M: Well I do and that’s your problem. Now what about this script I kept hearing about from Cornhole?

…

M: Did you get my email?

V: Yeah.

M: And you can see the place in the code where you’re locking the whole table?

V: Yeah.

M: So how is it that you lock the entire table and you claim that it’s the backup blocking everything? Haven’t you noticed that the backup only decides to block stuff when this process is running?

V: That does sound a little suspicious when you put it that way. What can we do?

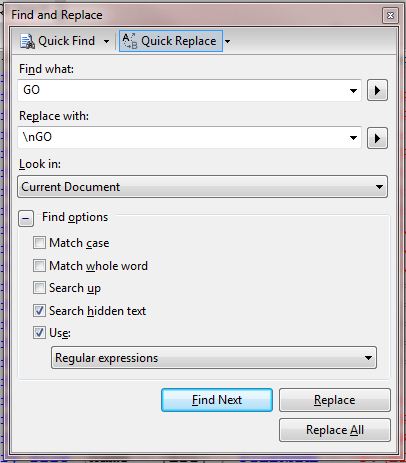

M: Let me take those TABLOCKs out of there so I can query these tables without locking everything up.

V: I just saw your email signature and I’ve never heard of an MVP before, but I just looked it up. Are you really one of those guys?

M: Yeah.

V: Wow, so I guess you really know what you’re doing then.

M: I’ve hung a few DBs in my day, sure.

V: Do you mind if I write you again if I have any questions?

M: I suppose that depends on whether you’ll let me change that stupid sp or not.

V: Yeah, go ahead. I’ll have to have a talk with our devs tomorrow and try to convince them to put this in our next build. Honestly, I’m only a tech lead here because I’ve been here a few months longer than anyone else on the support team. We get all this stuff from the devs and they won’t tell us anything.

M: So what about this script I keep hearing about? The one that tells you how to unconfuse SQL and give you the right spid for a process?

V: That’s a script the devs have and they won’t show it to me either. I just tell my guys something else so they won’t know I haven’t seen it.

M: Wow, that sucks, man. You do know, though, that a script like that doesn’t exist, right? It’s complete bullshit.

V: Yeah, I hate this place. I’ve been suspecting for a while now that they were lying to me, but what can I do? I need this job. I’m just lucky to be working. I have no idea what I’m doing here.

M: I don’t know what to say to that. You’re right, you are lucky to be working these days. But pick up a couple books on your own and learn SQL. Don’t rely on them. There are websites, blogs, etc. I myself run a site.

…

So in short, I really started to feel for this guy. He ended the call apologizing 100x for the grief they’ve caused me, and he’s going to go out right away and start watching my vids and trying to learn more. He asked if he could write me with questions, and I said, of course, dude, anytime. That’s what I do.

And yeah, he sounded about 22 as well. Turns out I was right. I asked, and he was 21; this was only his 2nd job, and they brought him in from nothing and “trained” him. I’d say he’s got some work to do. But I personally consider this exchange a success.