As long as I’m on a roll here with server-level ops, I thought I’d go ahead and cover dropping DBs in PS. This should be a fairly short post as there’s not much to this, but it’s worth talking about anyway.

Dropping DBs isn’t any big deal in T-SQL:

DROP DATABASE SeansDB

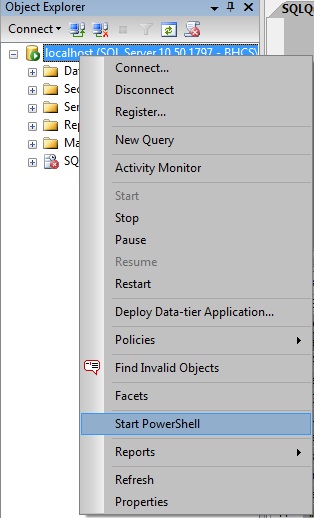

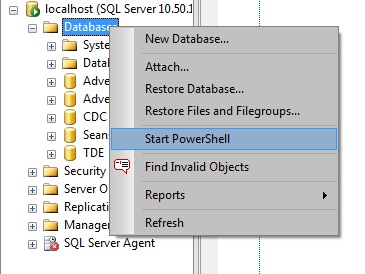

And the PS method for doing this is of course a 1-liner, but it’s not as easy as T-SQL. Again, there’s more than one method for doing it, and I’ll show you both of them. Whether you connect through sqlps or through PS proper, you want to be at the Databases node. Here’s a screenshot of doing it from sqlps, but again, it really doesn’t matter.

Method 1

dir | %{$_.Drop()}

There are a couple of things to note here. First of all, this is typical PS, so if you run this cmd as it’s written here, you’ll drop every DB on your server… totally uncool. Second, I’m always saying that if you see an Alter() method in the get-member list, that usually means you have to call it before your changes are pushed to the server itself. Well, this is one of those times that makes me put the ‘usually’ in there, because while that’s a good rule of thumb, PS is nice enough to drop objects as soon as you ask it to. So anyway, unless you want to lose everything, I wouldn’t run the code above. I just wanted to have a basis for future syntax.

So all we have to do now is limit our result list to the DB we’re interested in:

dir | ?{$_.Name -eq "SeansDB"} | %{$_.Drop()}

It just doesn’t get any easier than that. Now, at this point T-SQL is still ahead, ’cause even I would still much rather use T-SQL for dropping a single DB. PowerShell is going to pull ahead pretty fast when it comes to dropping several DBs, or even a single DB on multiple servers.

Let’s say you’ve got several test DBs, and they all have the word ‘test’ in them somewhere. Since different devs created them, they don’t have a solid naming convention. Hell, some of them may even just have ‘tst’ in them, who knows, right?

At this point it’s just a matter of altering the above script so that it can accommodate your criteria.

dir | ?{$_.Name -match "test" -or $_.Name -match "tst"} | %{$_.Drop()}

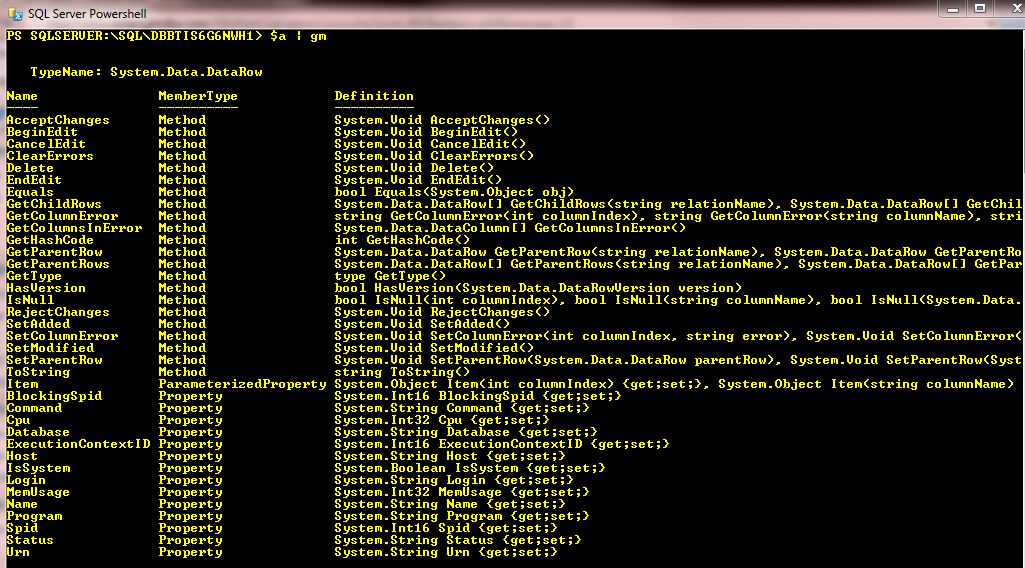
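Since a loose pattern like ‘tst’ can match more than you expect, it may be worth previewing what the filter catches before anything gets dropped. Here’s a minimal sketch of that idea, assuming you’re already sitting at the Databases node like in the examples above. The only change is that the last stage prints the name instead of calling Drop().

```powershell
# Step 1: list what the filter matches. Nothing is dropped here.
dir | ?{$_.Name -match "test" -or $_.Name -match "tst"} | %{$_.Name}

# -match takes a regular expression, so the two tests can be collapsed into one.
dir | ?{$_.Name -match "test|tst"} | %{$_.Name}

# Step 2: only once the list looks right, swap the last stage for the drop.
# Left commented out on purpose.
# dir | ?{$_.Name -match "test|tst"} | %{$_.Drop()}
```

Keep in mind that -match is case-insensitive by default, so ‘Test’, ‘TEST’, and ‘test’ all get caught.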

T-SQL would require you to code a cursor for this, and while the for-each is technically a cursor in PS, it takes next to no coding for us. PS is starting to pull ahead a little now. And by simply changing the where-object criteria, you can easily change this script to do things that are more difficult in T-SQL, like dropping DBs that are all owned by a certain login, or were created on a certain date, or are under or over a certain size, or even haven’t been backed up recently. Of course, some of those criteria you’d never use, but they’re all there for you. And again, you can find out what you can use by doing a get-member; anything that comes up as a property is usable.

dir | gm
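To make those other criteria a little more concrete, here’s a rough sketch of what the where-object filters might look like. The property names (Owner, CreateDate, Size, LastBackupDate) are my assumption of what get-member shows on a database object, and the login, date, and thresholds are made up for illustration, so check them against your own get-member output first. Every line just lists names; nothing is dropped.

```powershell
# Confirm which properties exist before filtering on them.
dir | gm -MemberType Property

# DBs owned by a certain login (hypothetical login name).
dir | ?{$_.Owner -eq "DOMAIN\SomeDev"} | %{$_.Name}

# DBs created before a certain date (hypothetical cutoff).
dir | ?{$_.CreateDate -lt (Get-Date "2010-01-01")} | %{$_.Name}

# DBs over a certain size. Assumes Size is reported in MB.
dir | ?{$_.Size -gt 1024} | %{$_.Name}

# DBs with no backup in the last 30 days.
dir | ?{$_.LastBackupDate -lt (Get-Date).AddDays(-30)} | %{$_.Name}
```

Once a filter returns the list you expect, replacing the final %{$_.Name} with %{$_.Drop()} turns the preview into the real thing.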

Method 2

Now let’s look at a shorter method for dropping a single DB.

(dir SeansDB).Drop()

That’s pretty easy, and to do multiple DBs, it could look something like this:

(dir | ?{$_.Name -match "test" -or $_.Name -match "tst"}).Drop()

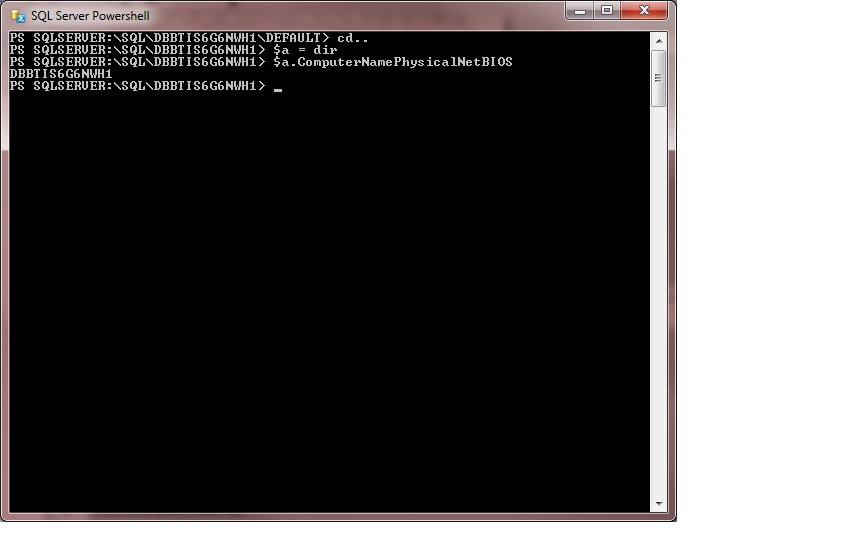
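One caveat on the multi-DB version of Method 2: calling a method on a whole list of results relies on PowerShell calling it on each item for you, which as far as I know only works in PowerShell 3.0 and later. If the parenthesized form complains that the method doesn’t exist, or there’s a chance the filter matches nothing, the pipeline form from Method 1 is the safer bet. A quick sketch of the two side by side, assuming you’re at the Databases node (pick one, don’t run both):

```powershell
# Check which version of PowerShell you are running.
$PSVersionTable.PSVersion

# Method 2 form: depends on PowerShell 3.0+ to call Drop() on each match.
# Errors out if the filter returns nothing, since there is no object to call Drop() on.
# (dir | ?{$_.Name -match "test" -or $_.Name -match "tst"}).Drop()

# Method 1 form: works in any version and quietly does nothing when no DB matches.
dir | ?{$_.Name -match "test" -or $_.Name -match "tst"} | %{$_.Drop()}
```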

Now, if you have a DB that exists across multiple boxes then you can drop all of them like this:

$a = "server1", "server2", "server3"

$a | %{

$ServerName = $_; ## Just setting this to make the script easier to read.

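cd sqlserver:\sql\$ServerName\default\databases ## Assumes the default instance. You need to be at the Databases node for the next line to work.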

(dir SeansDB).Drop()}

And that’s it. Going against multiple boxes is so easy. And the way I’m doing it, by setting the $a var to the server list, you can easily populate $a any way you like and you don’t have to change the rest of the script. So make it the results from a SQL query, or from a txt file, etc. It doesn’t matter.
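Here’s a quick sketch of a couple of ways $a could be populated. The file path, server name, database, table, and column are all hypothetical placeholders, so swap in whatever you actually have. Invoke-Sqlcmd is assumed to be available, which it should be if you’re in sqlps.

```powershell
# Option 1: a text file with one server name per line (hypothetical path).
$a = Get-Content "C:\servers.txt"

# Option 2: a SQL query against a server list you maintain somewhere.
# The instance, database, table, and column names here are made up.
$a = Invoke-Sqlcmd -ServerInstance "MgmtServer" -Database "DBA" -Query "SELECT ServerName FROM dbo.Servers" | %{$_.ServerName}

# Either way, check the list before feeding it to the drop loop.
$a
```

The rest of the script stays exactly the same, since it only cares that $a is a list of server names.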

OK, so that’s it this time. Be careful with this or you’ll find yourself doing a recovery on all your DBs. Man, it would be really easy to be evil with this, huh? In less than 60 seconds you could kill every DB in your company if you wanted to… say if they fired you for no reason and you wanted to get back at them. I’m not saying you should, I’m just saying it’s possible.

And after something like that I’m compelled to say (again) that I’m not responsible for your (mis)use of any of these methods.