One of the places PS shines is in its ability to let you work the way you want. So often I find someone stagnating in PS because they can’t figure out with “proper” way to do the task. So they stare at the screen for along time, try a few things, and finally give up. The problem with that is that all of a sudden PS is this new thing they don’t understand and they don’t have time to learn it nuances well enough to make it useful. So they get discouraged and don’t even consider it when coming up with solutions to issues. And this is coupled with that fact that sooooooo much of the PS examples out there are by guys who just don’t get it. They post these really long scripts that are written in native SMO when a simple 1-liner will do. Why would you do that to someone? I’ve said many times that if those scripts had been my first intro to PS I wouldn’t have given it a second glance either. To me, these guys can definitely code, but they really don’t *get* what PS is all about.

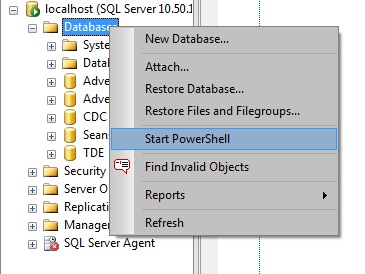

So here I’m going to help you with what I’m calling the conduit method of learning PS. Because even now when I can’t figure out how to do something in “proper” PS, I still fallback on what I know. I’ve said many times in my SQLSaturday sessions that PS will do pretty much whatever you tell it, and that it’s perfectly compatible with regular DOS commands. And the same thing goes with T-SQL.

Yesterday I needed to take a user account and add it to every DB on a big server. This server had hundreds of DBs on it. Well, of course I went to PS. My goal was to perform the task as fast as I could and go on about my day, so I chose the conduit method instead of the native PS method. Sure, I can call the regular SMO methods and maybe even figure out how that’s done, and figure out the exact params the methods take, etc, but SMO is so poorly documented it’s hard to ferret some of that stuff out. And you know what? Sometimes it’s more important to just get the job done than it is to make sure it’s done in “proper” or native PS.

So what is this conduit method I’m talking about? Well, it’s marrying what you know in T-SQL and using PS only as a conduit for your T-SQL. This usually consists of using Invoke-SqlCmd inside a loop. This way you get the best of both worlds. You get the power of PS and the simplicity of what you already know and you don’t have to rewrite it every time. So again, the conduit method let’s you code in T-SQL, and just delivers it in PS. And it’s a very powerful technique.

OK, so adding a user account to all my DBs. Here’s what the script looked like:

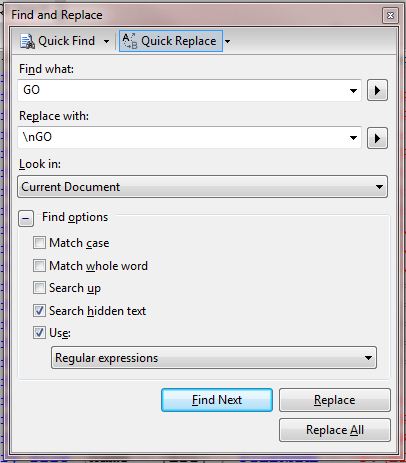

dir | %{invoke-sqlcmd -ServerInstance localhost -database $_ -query "CREATE USER [MyUser] FOR LOGIN [MyUser]"}

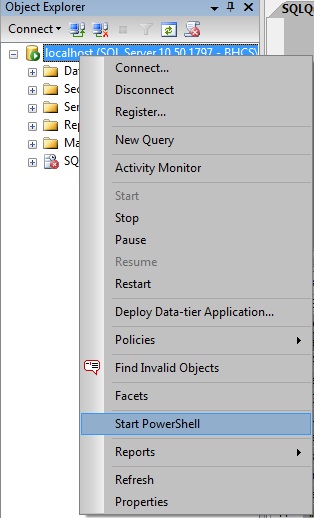

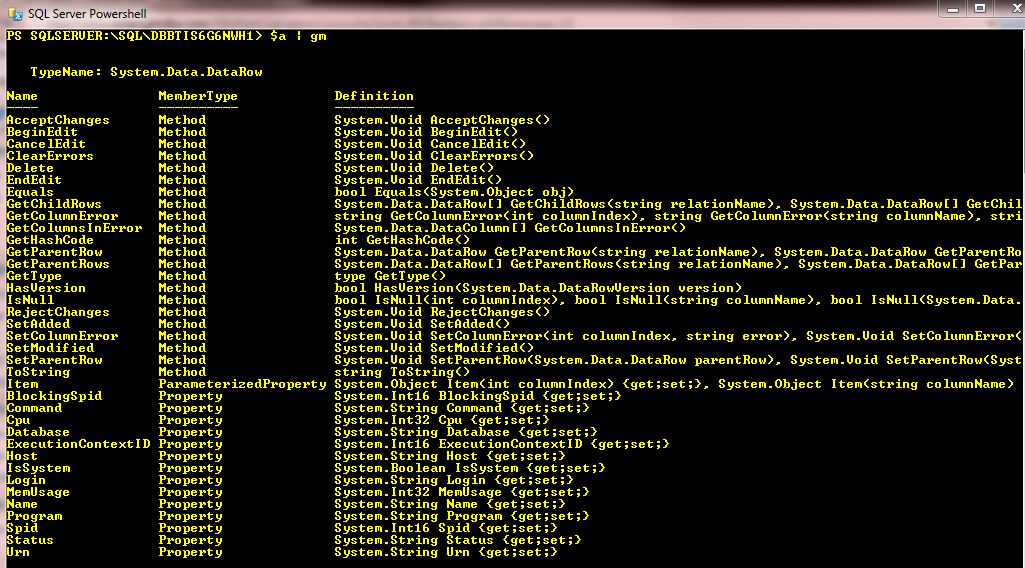

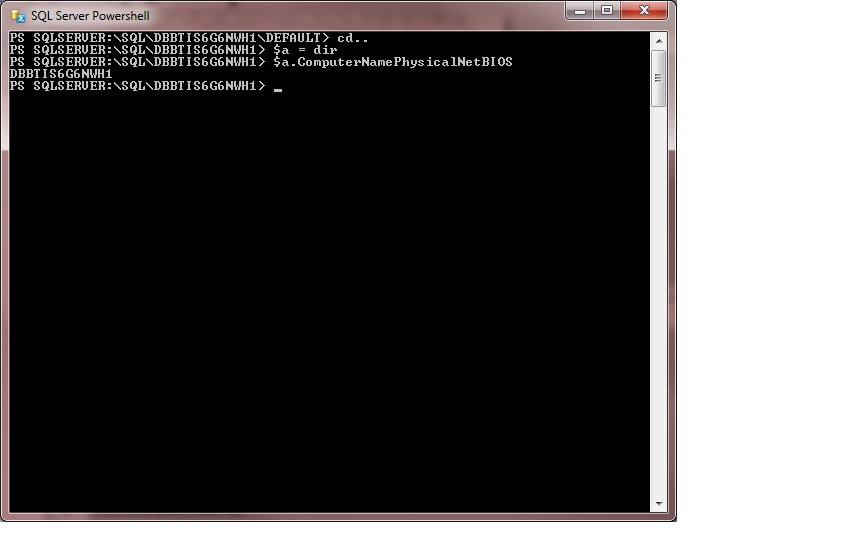

So I start at the DB tree in PS. If you run a ‘dir’ command you’ll get a list of DBs. Now, you loop through them, and for each one, you call invoke-sqlcmd and connect to that DB. Now you run the T-SQL inside the -query param. It’s that easy. Now you’ve got a repeatable method for running code against a whole list of DBs on any server and it’ll run whatever is in the -query param.

And if you’ve got code that’s more complicated, you can put it in a file and run it that way. It would look like this:

dir | %{invoke-sqlcmd -ServerInstance localhost -database $_ -InputFile c:\MySQLFile.sql}

With this you can deploy SPs or views, or tables, or whatever you like to every DB. And you can easily alter it to loop across servers too. So even if you’re a beginner you can use this method because it never changes and you can still get the full power out of PS. And this is the point isn’t it?

So many big PS guys completely miss the point that PS is supposed to make our lives easier. They insist that things have to be done a certain way and frankly I’m getting tired of all the little pissing contests that take place. Oh you have to do it this way because it performs better. Or you have to do it this way because it’s more elegant. And while I agree with those to a certain degree, there’s nothing more elegant than getting the job done and not having to spend all day doing it. I’ve had arguments with guys about scripting DB objects and they insist that the native SMO is faster than the way I do it even though mine is a lot less code. And I’ve tested it, and native SMO is faster. On one of my DBs that had several thousand objects the SMO came in like 15secs sooner than the shorter way. Wow, 15secs, really? What will I do with all that extra time? Maybe I can use it to finally write that book I’ve been planning on huh? So for most cases, these differences are just pissing contests that I have no use for.I don’t care if scripting an entire DB takes a few extra secs. I’ll spend far more time than that writing the SMO it takes to save that time.

And since a lot of our work is adhoc in nature, it helps us to be able to write short, tight code. And by adhoc I mean they’re requests that we couldn’t have forseen so we have to write something from scratch. I don’t know about you, but I don’t want my hands in raw SMO 4x a day. I’d rather write a few 1-liners and go to lunch.

So don’t let anyone tell you that the conduit method is worthless or even inferior. It’s just as valid a method as writing 2 pages of SMO to do the same thing, and it performs plenty fast enough. Don’t be afraid of PS. Use the conduit method until you can learn more PS and in the meantime you’ll be more productive than you’ve ever been.

And we’ve got lots of PS vids on MidnightDBA.com, so go check them out and we’ll explain all kinds of things to you.