Here’s a really good one. I noticed that very few of my boxes had a job to recycle the sql error logs. This is important because unless specifically done, the error log will only recycle when the service is burped in some way. For those of you who aren’t up on the lingo, recycling the error log is when you close down one log and create a new one. The error logs are just txt files kept on the server. So recycling creates a new physical txt file. And the reason you care about this is because some servers don’t get bounced very often at all and the files can get quite large. I have one server with a 40GB error log. And when I try to open it, it sits there for like 2hrs and then finally dies cause there aren’t enough system resources to deal with a file that big. So you can see why you want to keep the size of the files down.

And like I said, the error log only recycles when you burp the service or do it manually. And the way you do it manually is by running this code:

master.dbo.sp_cycle_errorlog

But doing it manually isn’t good enough because it has to be done on a regular basis. I prefer to recycle my logs every day. It’s a good generic schedule and honestly I don’t ever remember needing anything more granular. OK, so a new log every day is what we’re after. That means we need to put this code in a job and have it run every day at the same time.

So ok, that set the stage for what we’re wanting to do here. Now we can get into what we actually wanna talk about… and that’s how we push our new job to all of our boxes. Now, I’m going to do this a specific way and I’m doing it like this for a reason. I’ll explain once I’m done.

Here are the steps I want you to take to prep for this venture.

1. Create a job to occur every day (Midnight is preferable) and include the above code in the job step.

2. Be sure to set the job owner to sa.

3. Script the job and save it as a file somewhere.

4. You’ll have to script the job for different versions of SQL. SQL2K doesn’t have job schedules so the script blows up. So at least have one for SQL2k and below, and one for 2K5 and above.

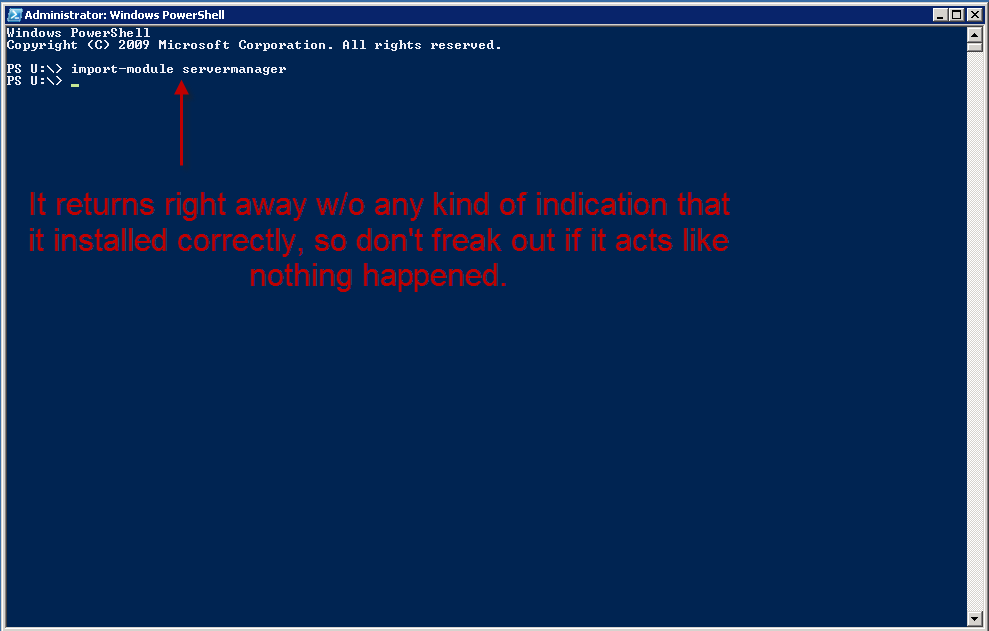

Now here’s the powershell script you’ll run.

$SourceSQLScript1 = “\\Server01\F$\SQLServerDBA\Scripts\Collector\SQLQueries\ServerListAllSQL2000.txt”;

$SqlCmd1 = Invoke-Sqlcmd -ServerInstance $Server -Database $StatsDB -inputfile $SourceSQLScript1

$SqlCmd1 | % {

[System.String]$ServerName = $_.ServerName;

$ServerName;

invoke-sqlcmd -ServerInstance $ServerName -Database “MSDB” -InputFile “\\Server01\F$\SQLServerDBA\Scripts\ServerConfig\RecycleSQLErrorLogJOB-SQL2K.sql” -SuppressProviderContextWarning

}

Now I’m going to explain what’s going on here and why:

1. $SourceSQLScript1 — This is the var that holds the location of the txt file where I store the query for the list of servers to run against. Inside the file looks like this: select ServerID as InstanceID, ServerName from dbo.Servers (nolock) where IsSQL = 1 and SQLVersion = 2000. The reason I do it this way is because if I ever need to change the query, all of the scripts that call the query will automatically pick up the change. So putting the query in a file is a good thing.

2. $SqlCmd1 — here’s where I’m actually running the query in the step above and setting the results to this var. Now I’ve got an array of ServerNames I can cycle through to run my script on.

3. [System.String]$ServerName = $_.ServerName; — Here I’m just setting the current cursor iteration of the ServerName equal to a var ($ServerName). It just makes it easier to read and if something comes up where I need to make a minor adjustment to how the ServerName looks, then I can mess with the var and not have to touch the rest of the script. Again, reducing effects to the script if I need to make changes to the value. So it’s like later on down the line if I discover that I need to put [] around it or something like that. This could also be done in the query file, but it’s nice to have the option here as well should I need something special for a single script.

4. $ServerName; — Here I’m just printing out the ServerName so I can watch my script cycle through the boxes. It’s my way of knowing what’s going on. I hate that blinking cursor.

5. invoke-sqlcmd — This is where the real magic happens. I’m connecting to each box and running the job script I saved above.

Here are some finer points of the logic for why I did things the way I did:

1. I put the server list query into a var. This is to allow you (or me) to get the server list any way we like w/o disturbing the rest of the query. You could have a hardcoded list of serverNames, or have them in a file… whatever you like. Here’s a really good example.

Instead of this:

$SqlCmd1 = Invoke-Sqlcmd -ServerInstance $Server -Database $StatsDB -inputfile $SourceSQLScript1

You can have this:

$SqlCmd1 = “Server1”, “Server2”, “Server3”, “Server4”, “Server5”

And the rest of the script stays the same because the cursor is run off of the var. It doesn’t care how that var gets populated.

2. I chose to run this separately for each edition of SQL. So I ran it once for SQL2K, once for SQL2K5, etc. That’s because like I said there are differences in the job scripts for the different versions. I could have done it all in a single script, but I really don’t like coding that logic in there and it increases the size of the script because now I have to check for the version of SQL and make a decision before I can create the job. I have to point it to the right .sql script. That’s too much work when all I have to do is change the query file and the script file between runs. And this way, I can run them concurrently for each version simply by saving the script under different names and kicking them off at the same time.

3. This one is my favorite because it’s what makes this so flexible. I chose to put the sql logic itself into a file and just call that file. The reason I did this is because not only is the sql logic too long for this type of script, but if I’ve got anything else I need to run against all my boxes, all I have to do is change the sql script I’m calling. I don’t have to make any changes at all to the rest of the script. So if I want to create or delete any specific users off of a group of boxes, all I have to do is put that code in a script and save it, and then point this script to it. Or anything else, right? This is really the bread and butter for powershell and multi-server mgmt.

So guys, take this boiler plate script and methodology and apply it to your own stuff. I’ve taken a lot of stuff out of the script for this blog because my scripts are part of a much larger solution I’ve developed and it’s not relevant to what you’ll be doing. But the script works as-is and you can add whatever you want to it. And now you’ve got a generic way to push T-SQL out to your boxes, and a generic way to populate the server list it goes against. What else do you need really?