Here’s the situation…

You get a call from one of your customers saying that the log has filled up on the DB and they can’t do anything anymore. So you connect to the server and find out that the log backups haven’t been running. You run the backup and everything is hunky-dory. But why did it fail to run in the first place? Well, about 3 seconds of investigation tells you that Agent was turned off. Ok, you turn it back on and go about your business. But this isn’t the way to do things. You don’t want your customers informing you of important conditions on your DB servers, and you certainly don’t want Agent to be turned off.

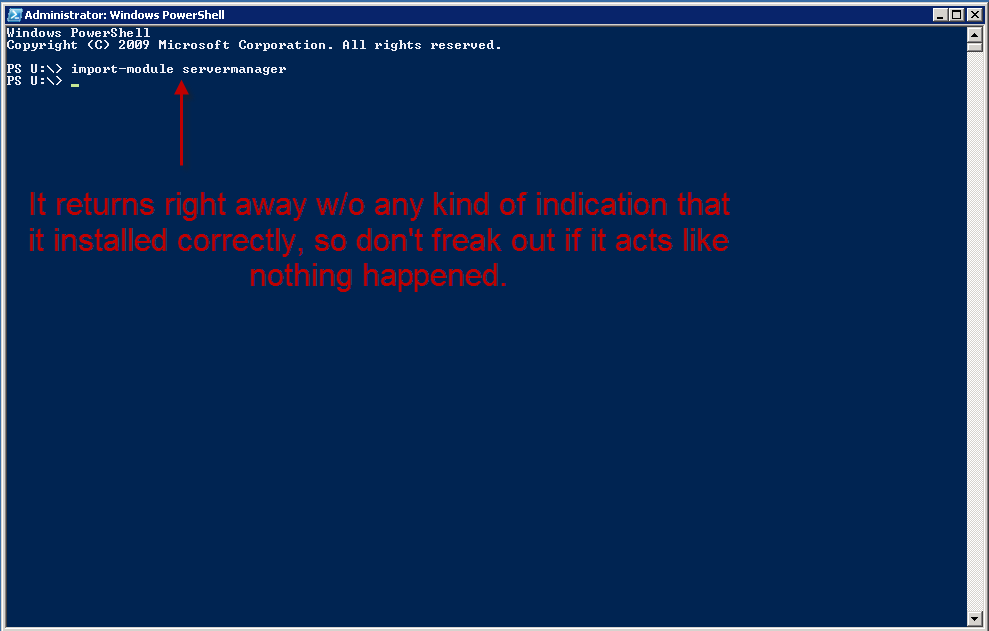

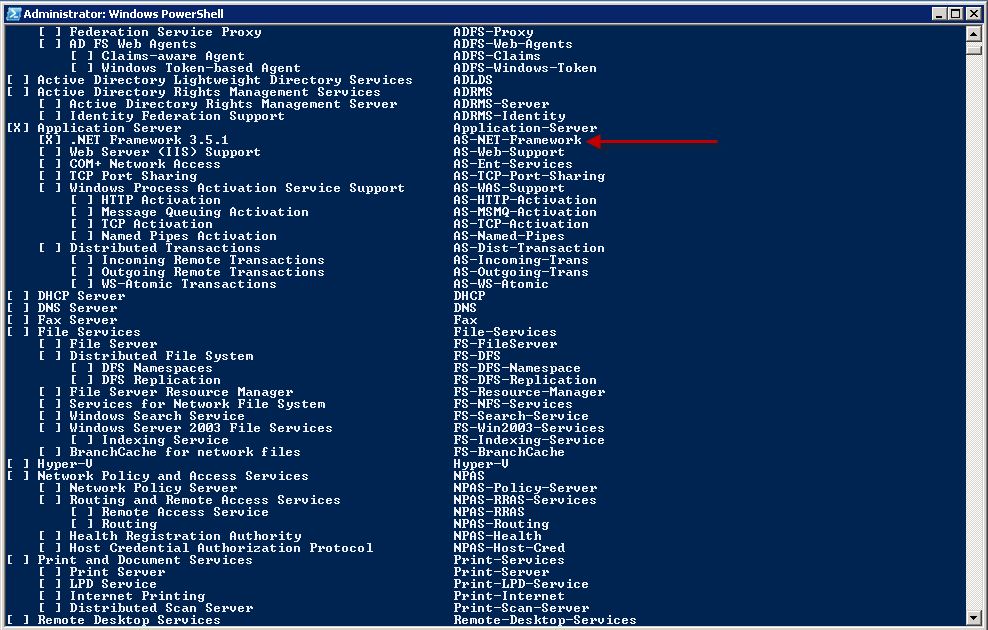

And while there may be other ways to monitor whether services are running, I’m going to talk about how to do it in PS. There are 2 ways to do this in PS: get-service and get-wmiobject. Let’s take a look at each one to see how they compare.

In the old days (about 2 years ago), when all we had was the antiquated PowerShell v1, you had to use get-wmiobject for this task because get-service couldn’t hit remote boxes. That changed in v2, so now you can easily run get-service against a remote box with the -computername parameter.

```powershell
get-service -computername Server2
```

And of course it supports a comma-separated list like this:

```powershell
get-service -computername Server2, Server3
```

And just for completeness, here’s how you would sort it, because by default they’re going to be sorted by DisplayName, so services from both boxes will be intermingled.

```powershell
get-service -computername Server2, Server3 | sort -property MachineName | FT MachineName, DisplayName, Status
```

Ok, that was more than just sorting, wasn’t it? I added a format-table (FT) with the columns I wanted to see. You have to put the MachineName there so you know which box you’re going against, right? And the Status tells you whether it’s running or not.

Remember though that I said we were going to do SQL services, and not all the services. So we still have to limit the query to give us only SQL services. This too can be done in 2 ways:

```powershell
get-service -computername Server2, Server3 -include "*sql*" | sort -property MachineName | FT MachineName, DisplayName, Status

get-service -computername Server2, Server3 | ?{$_.DisplayName -match "sql"} | sort -property MachineName | FT MachineName, DisplayName, Status
```

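So here I’ve used -include and where-object (?). They’ll give you very similar results, but not always identical ones: -include matches against the service Name, while the where-object above is matching on DisplayName, so a service whose display name mentions SQL but whose name doesn’t will only show up in the second version. And don’t count on -include to save you network traffic. Get-service still pulls the full list of services back from each box and applies -include on the client, so both versions end up doing their filtering locally. If you really want the filtering done on the remote server, get-wmiobject’s -filter (which we’ll get to below) is the way to do it.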
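If you want to catch services whose Name and DisplayName differ (-include matches on the service Name, while the where-object version checks DisplayName), one option is to test both properties. For instance, the Reporting Services service is often named ReportServer even though its display name starts with “SQL Server”. Here’s a minimal sketch. Select-SqlService is a hypothetical helper name, and the demo objects only mimic get-service output so it runs without a server. This still filters on the client.

```powershell
# Hypothetical helper: keep services whose Name OR DisplayName mentions "sql".
# -like is case-insensitive by default in PowerShell.
function Select-SqlService {
    param(
        [Parameter(ValueFromPipeline = $true)]
        $Service
    )
    process {
        if ($Service.Name -like '*sql*' -or $Service.DisplayName -like '*sql*') {
            $Service
        }
    }
}

# Demo objects standing in for get-service output (same property names).
$demo = @(
    [pscustomobject]@{ MachineName = 'Server2'; Name = 'MSSQLSERVER';  DisplayName = 'SQL Server (MSSQLSERVER)';                     Status = 'Running' }
    [pscustomobject]@{ MachineName = 'Server2'; Name = 'ReportServer'; DisplayName = 'SQL Server Reporting Services (MSSQLSERVER)'; Status = 'Stopped' }
    [pscustomobject]@{ MachineName = 'Server3'; Name = 'Spooler';      DisplayName = 'Print Spooler';                                Status = 'Running' }
)

$sqlServices = $demo | Select-SqlService
$sqlServices | Sort-Object MachineName | Format-Table MachineName, DisplayName, Status
```

Against real boxes you’d pipe get-service -computername Server2, Server3 into Select-SqlService in place of $demo.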

And of course, you don’t have to use that inline list to go against several boxes. In fact, I don’t even recommend it, because it doesn’t scale. For the purposes of this discussion I’ll put the servers in a txt file at C:\Servers.txt, which lets you very conveniently have as many servers in there as you like. When creating the file, just put each server on its own line like this:

```text
Server2
Server3
```

So here’s the same line as above, reading from the txt file instead:

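```powershell
# sort and format once, after the loop, so you get a single table instead of one per server
get-content C:\Servers.txt | %{get-service -computername $_ -include "*sql*"} | sort -property MachineName | FT MachineName, DisplayName, Status
```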

This is well documented, so I’m not going to explain the foreach (%) to you.
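Hand-edited server lists tend to pick up blank lines and trailing spaces, and those get passed straight to -computername. A minimal sketch of cleaning the list first. Get-ServerList is a hypothetical helper, and treating lines that start with # as comments is an assumed convention, not something get-content does for you.

```powershell
# Hypothetical helper: one server name per line; trim whitespace and skip
# blank lines and lines starting with '#' (an assumed comment convention).
function Get-ServerList {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Path
    )
    Get-Content -Path $Path |
        ForEach-Object { $_.Trim() } |
        Where-Object { $_ -and -not $_.StartsWith('#') }
}

# Demo: write a small file with the kind of noise hand-edited lists pick up.
$demoFile = Join-Path ([System.IO.Path]::GetTempPath()) 'Servers-demo.txt'
Set-Content -Path $demoFile -Value @('Server2', '', '  Server3  ', '# Server4 is being rebuilt')

$servers = Get-ServerList -Path $demoFile
$servers   # -> Server2, Server3
Remove-Item -Path $demoFile
```

In the loop above, you’d swap get-content C:\Servers.txt for Get-ServerList C:\Servers.txt and leave the rest of the pipeline as-is.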

Ok, so let’s move on to the next method because I think I’ve said all I need to say about get-service. But isn’t this just gold?

get-wmiobject

Earlier I was talking about what we did in the old days, and I always used to recommend get-wmiobject because of the remote-server limitation in get-service. However, does that mean that get-wmiobject is completely interchangeable with get-service now? Unfortunately not. I’m going to go ahead and cut to the chase and say that you’ll still wanna use get-wmiobject for this task most of the time… if not all of the time, because why change methods?

You’ll notice one key difference when you run gm (get-member) against these 2 methods:

```powershell
get-service | gm

get-wmiobject win32_service | gm
```

The get-wmiobject version has more methods and more properties.

And the key property we’re interested in here is the StartMode.

If you’re going to monitor for services to see which ones are stopped, it’s a good idea to know if they’re supposed to be stopped. Or even which ones are set to Manual when they should be set to Automatic.
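To make that concrete, here’s a minimal sketch of the check: flag anything set to start automatically that isn’t running, and leave alone services that are stopped on purpose. It assumes win32_service reports automatic services with a StartMode of 'Auto' and running ones with a State of 'Running'. Find-ServiceProblem is a hypothetical helper, and the demo objects only mimic get-wmiobject output.

```powershell
# Hypothetical helper: report services set to start automatically
# (StartMode 'Auto' in win32_service) that are not currently running.
function Find-ServiceProblem {
    param(
        [Parameter(ValueFromPipeline = $true)]
        $Service
    )
    process {
        if ($Service.StartMode -eq 'Auto' -and $Service.State -ne 'Running') {
            $Service
        }
    }
}

# Demo objects with the property names get-wmiobject win32_service returns.
$demo = @(
    [pscustomobject]@{ SystemName = 'Server2'; DisplayName = 'SQL Server (MSSQLSERVER)';       State = 'Running'; StartMode = 'Auto' }
    [pscustomobject]@{ SystemName = 'Server2'; DisplayName = 'SQL Server Agent (MSSQLSERVER)'; State = 'Stopped'; StartMode = 'Auto' }
    [pscustomobject]@{ SystemName = 'Server3'; DisplayName = 'SQL Server Browser';             State = 'Stopped'; StartMode = 'Disabled' }
)

$problems = $demo | Find-ServiceProblem
$problems | Format-Table SystemName, DisplayName, State, StartMode -AutoSize
```

Only the stopped Agent gets flagged. The disabled Browser is stopped too, but its StartMode says that’s expected. Against live servers you’d pipe the get-wmiobject win32_service results into Find-ServiceProblem instead of $demo.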

And for this reason I highly recommend using get-wmiobject instead of get-service.

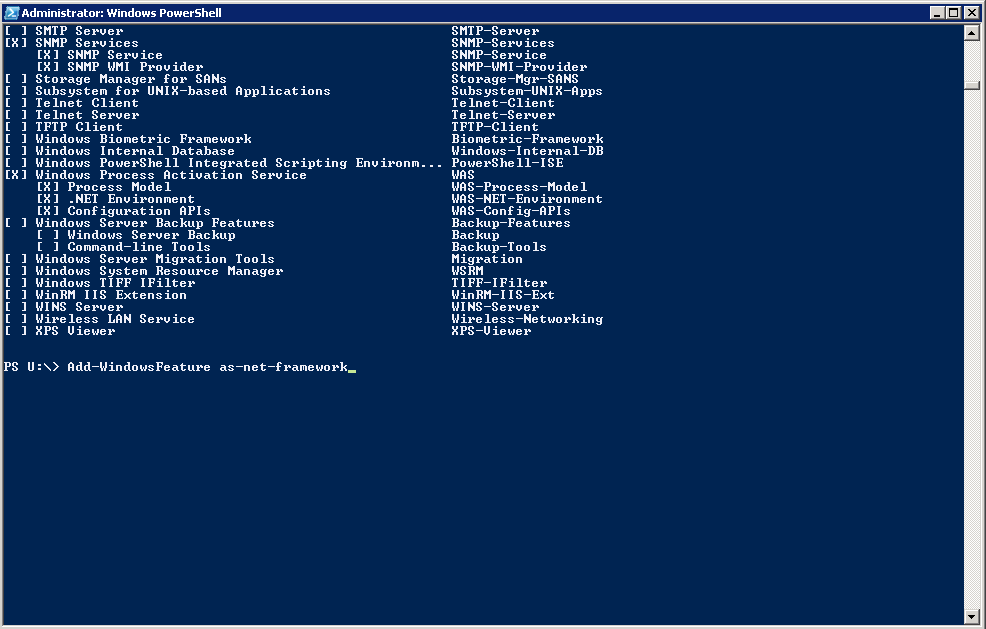

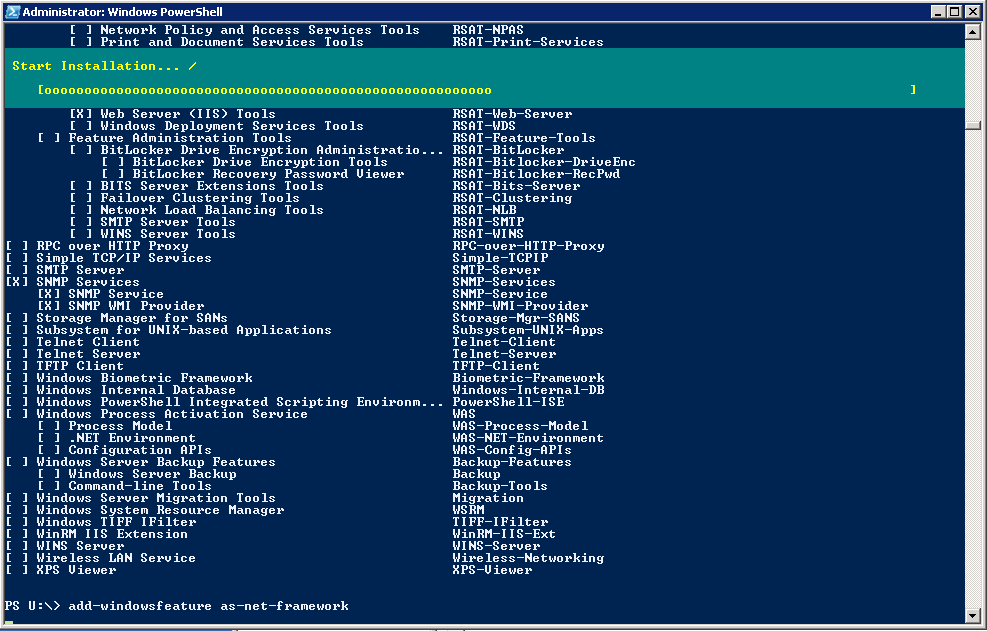

Here’s some sample code using the txt file again.

```powershell
get-content C:\Servers.txt | %{get-wmiobject win32_service -computername $_ -filter "DisplayName like '%sql%'"} | FT SystemName, DisplayName, State, StartMode -auto
```

Notice that the names of things change between methods too, so watch out for that. For example, MachineName becomes SystemName, and Status becomes State. You’ll also notice that I didn’t provide a full working example of a complete script. That’ll be for another time, perhaps. The script I use fits into an entire solution, so it’s tough to give you just a single script without also giving you all the stuff that goes along with it, and that gets out of the scope of a single blog post.
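One gap in the get-wmiobject one-liner: if a server is unreachable, get-wmiobject writes an error and that box simply doesn’t show up in the results, which is easy to miss when the script runs unattended. A minimal sketch of turning that into an explicit row instead. Invoke-ServiceQuery and the 'Unreachable' state are hypothetical names for illustration, and the demo passes a fake query so it runs without any servers.

```powershell
# Hypothetical helper: run a per-server query and turn failures into an
# explicit 'Unreachable' row instead of letting that server silently vanish.
function Invoke-ServiceQuery {
    param(
        [Parameter(Mandatory = $true)] [string[]]$ComputerName,
        [Parameter(Mandatory = $true)] [scriptblock]$Query
    )
    foreach ($computer in $ComputerName) {
        try {
            & $Query $computer
        }
        catch {
            [pscustomobject]@{
                SystemName  = $computer
                DisplayName = '(query failed)'
                State       = 'Unreachable'
                StartMode   = $null
            }
        }
    }
}

# Real use (Windows PowerShell) might pass something like:
#   { param($c) Get-WmiObject win32_service -ComputerName $c -Filter "DisplayName like '%sql%'" -ErrorAction Stop }
# -ErrorAction Stop matters: it makes the WMI error terminating so catch sees it.
# The fake query below returns one good server and throws for the other.
$fakeQuery = {
    param($c)
    if ($c -eq 'Server3') { throw "RPC server is unavailable" }
    [pscustomobject]@{ SystemName = $c; DisplayName = 'SQL Server (MSSQLSERVER)'; State = 'Running'; StartMode = 'Auto' }
}

$rows = Invoke-ServiceQuery -ComputerName 'Server2', 'Server3' -Query $fakeQuery
$rows | Format-Table SystemName, DisplayName, State, StartMode -AutoSize
```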

However, I’ll leave you with these parting pieces of advice when building your service monitor.

1. Instead of pulling the servers from a txt file, put them in a table somewhere so you can run all of your processes from that location.

2. Use get-wmiobject win32_service instead of get-service. It’s more flexible.

3. When you collect your data, just save it to a table somewhere instead of alerting on it right away. In other words, there should be a collection and a separate alerting mechanism.

*** Why, you ask? Well, I’m glad you asked, because not asking something that should be asked is like asking something that shouldn’t be asked, but in reverse. Anyway though… I prefer to get a single alert on all my boxes at once instead of an alert for each box, or for each service. And that kind of grouping is much easier to do in T-SQL than in PS. Also, there may be a time when a service is down for a reason and you don’t want to get alerts on it, but you still want to get alerts on the rest of the environment. This is easier to do in T-SQL as well. And finally, you may want to also attempt to start the services that are down, and that really should be a separate process so you can control it better. Or you may just want to be alerted and handle them manually. Again, if the service is supposed to be down for some reason, you certainly don’t want the collection process going out and restarting it for you. And the collection can be a nice way to make sure you remember to turn the service back on when you’re done with whatever you were doing. You’ll get an alert saying it’s down, and you’ll be all like, oh yeah, I totally forgot to turn that back on and my backups aren’t kicking off. Either way, you really do want the collection, alerting, and action processes to be separated. But that’s just me, you do what you want. ***

4. Keep a history of the status of the services. You can look back over the last few months and see which ones have given you the most trouble, and you can then try to discover why. It’s good info to have, and you may not realize how much time you’re spending on certain boxes until you see it written down like that.
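For items 3 and 4, the collection step mostly comes down to stamping every row from a run with the same collection time and appending it somewhere durable. Here’s a minimal sketch. A CSV file stands in for the table recommended above, and loading into SQL Server is left out. New-ServiceSnapshot and the CollectedAt column are hypothetical names, and the demo objects mimic get-wmiobject output.

```powershell
# Hypothetical helper: add a collection timestamp so each run becomes history rows.
# Alerting and restarts stay in separate processes (item 3).
function New-ServiceSnapshot {
    param(
        [Parameter(ValueFromPipeline = $true)]
        $Service,
        # Evaluated once per call, so every row from one run shares a timestamp.
        [datetime]$CollectedAt = (Get-Date)
    )
    process {
        [pscustomobject]@{
            CollectedAt = $CollectedAt
            SystemName  = $Service.SystemName
            DisplayName = $Service.DisplayName
            State       = $Service.State
            StartMode   = $Service.StartMode
        }
    }
}

$demo = @(
    [pscustomobject]@{ SystemName = 'Server2'; DisplayName = 'SQL Server (MSSQLSERVER)';       State = 'Running'; StartMode = 'Auto' }
    [pscustomobject]@{ SystemName = 'Server2'; DisplayName = 'SQL Server Agent (MSSQLSERVER)'; State = 'Stopped'; StartMode = 'Auto' }
)

$csv = Join-Path ([System.IO.Path]::GetTempPath()) 'ServiceHistory-demo.csv'
Remove-Item -Path $csv -ErrorAction SilentlyContinue   # start the demo clean

$snapshot = $demo | New-ServiceSnapshot
# -Append keeps earlier runs, so the file accumulates history over time.
$snapshot | Export-Csv -Path $csv -NoTypeInformation -Append

# History (item 4): which services were found not running most often?
Import-Csv -Path $csv |
    Where-Object { $_.State -ne 'Running' } |
    Group-Object SystemName, DisplayName |
    Sort-Object Count -Descending |
    Format-Table Count, Name -AutoSize
```

Once the rows live in a real table, that last query becomes a simple GROUP BY in T-SQL, which is where the alert grouping above is meant to happen anyway.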