Today we’re going to continue our exploration of the server level in PS. You’ll remember we explored it a little bit when we talked about querying cluster nodes.

So today we’re going to kill users in a DB. This is incredibly easy to do in PS.

First, let’s take a look at the T-SQL counterpart. Pretty much everyone has a script like this.

DECLARE @currUser varchar(100),

@SQL nvarchar(200)

DECLARE Users CURSOR

FOR select DISTINCT spid from sys.sysprocesses

WHERE [program_name] LIKE '%businessobjects%'

OPEN Users

FETCH NEXT FROM Users INTO @currUser

WHILE (@@fetch_status <> -1)

BEGIN

SET @SQL = 'KILL ' + @currUser-- + ''

EXEC (@SQL)

--print @SQL

FETCH NEXT FROM Users INTO @currUser

END

CLOSE Users

DEALLOCATE Users

And of course, the problem is the same as it always is in T-SQL: it doesn’t scale to several boxes, and if you find yourself w/o your script, it’s not really easy to reproduce off the top of your head unless you’re one of those freaks who can write cursors w/o looking at a map. And I don’t know about you, but I quite often find myself w/o my scripts, so I like things that are easier to reproduce.

And like I said, PS scales very well to multiple boxes. Why would you want to run it against more than one box, you ask?

Well, let’s say you’ve got a single app server that hits multiple DB servers and you want to kill all of its connections for a maint window. You can’t assume that stopping the service on that app server will kill the DB connections. In fact, it quite often doesn’t. There are other testing scenarios where this could be useful, but I’ll let all of you use it as you see fit. The point is it’s here if you need it.

So to do this in powershell:

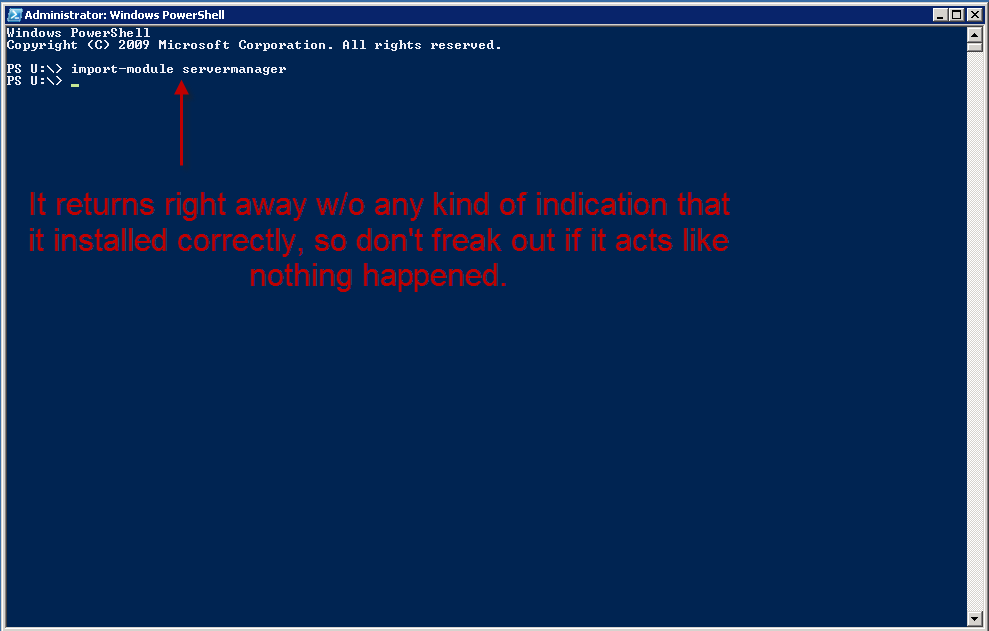

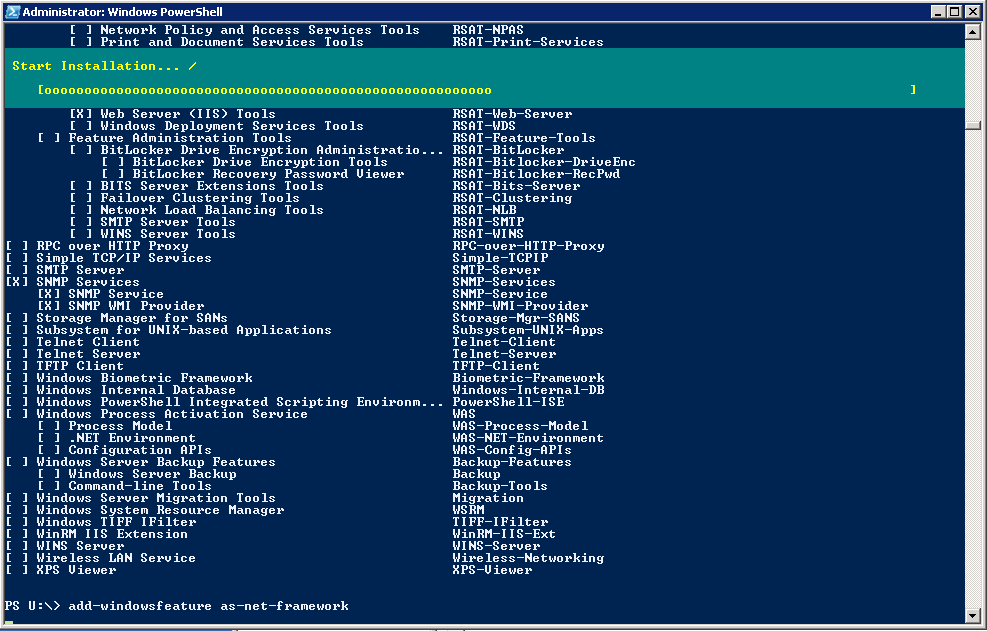

Start by connecting to SQL Server at the server level (either in sqlps or in PS proper… my examples will be in sqlps, but it really doesn’t matter as long as you connect).

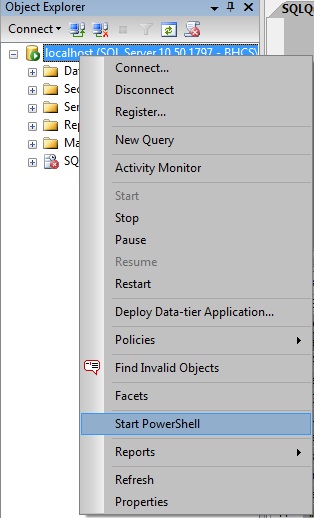

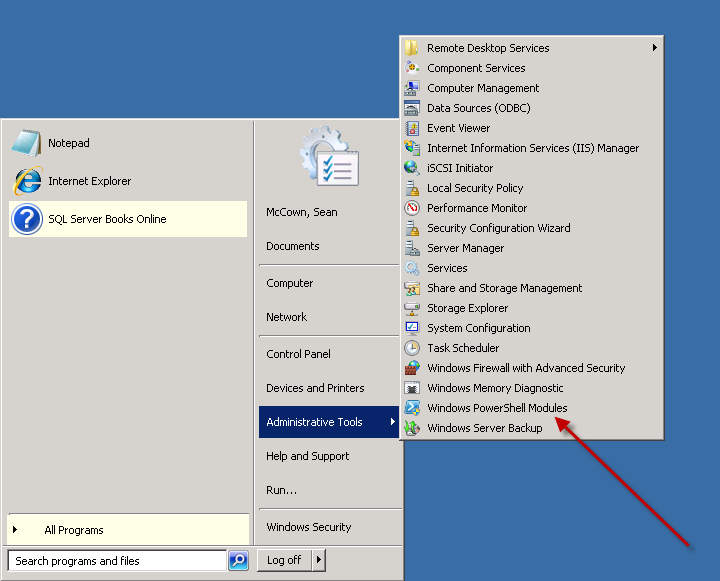

In SSMS you can get into sqlps by right-clicking the server name and choosing ‘Start PowerShell’.

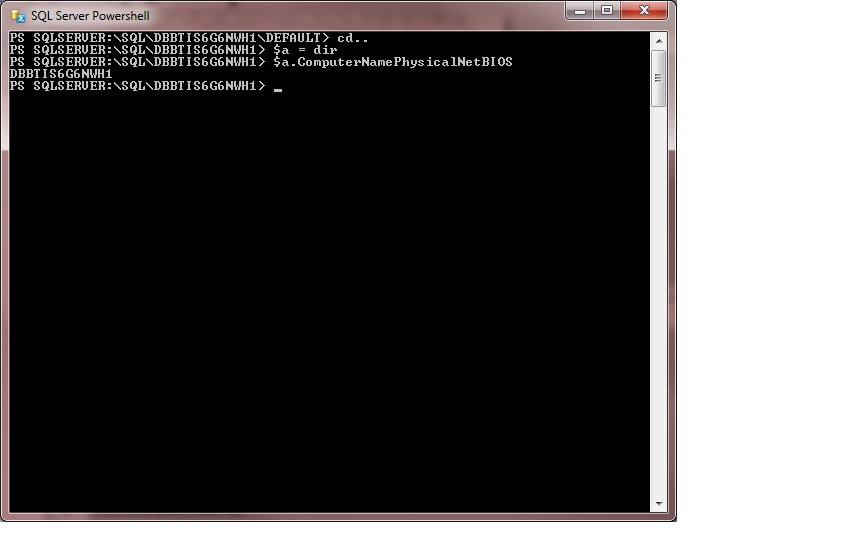

Then you just need to go up one level like this:

>cd..

Now you’re ready for the command.

So let’s say you want to drop all the spids connected to SeansDB. And as usual, there are 2 ways to do this. I’m going to show you both of them just for completeness.

Method 1:

dir | ?{$_.Name -eq "SeansDB"} | %{$_.KillAllProcesses("SeansDB")}

Now, those of you who know PS know this is actually quite wasteful. What you’re doing is getting a list of all the DBs, then filtering it down to one and then running the method.

And of course since you call the method with the DB name to begin with this is actually useless. However, it’s still a legal method so I wanted to mention it.

Method 2:

You’ve got to think about what you’re doing here so you can make the right decision. When you do this in sys.sysprocesses, you’re working at the server level, so the only place individual DBs come into play is with the result set of whatever cursor you write to limit your results. And quite often killing all the spids in a DB can be very useful. So here’s a better way to go about it in PS.

(dir).KillAllProcesses("SeansDB")

This is easy to remember, and easy to type. And of course, it’s obvious what it does… it kills all the spids connected to SeansDB. Now, you can also kill just a specific spid like this:

(dir).KillProcess(69)

And that’s how PS can bring that long t-sql cursor down to almost nothing. What? What’s that? You want more? You want to be able to kill spids based off of application, or CPU, or some other criteria? Well, now you’re just being demanding, but I think PS can do something for you. This is just going to take an extra step and here’s what it’ll look like.

In our example here let’s kill spids by application. So we’ll kill all users who are connecting through SSMS.

$a = (dir).EnumProcesses()

$a | ?{$_.Program -match "SQL Server Management Studio"} | %{(dir).KillProcess($_.SPID)}

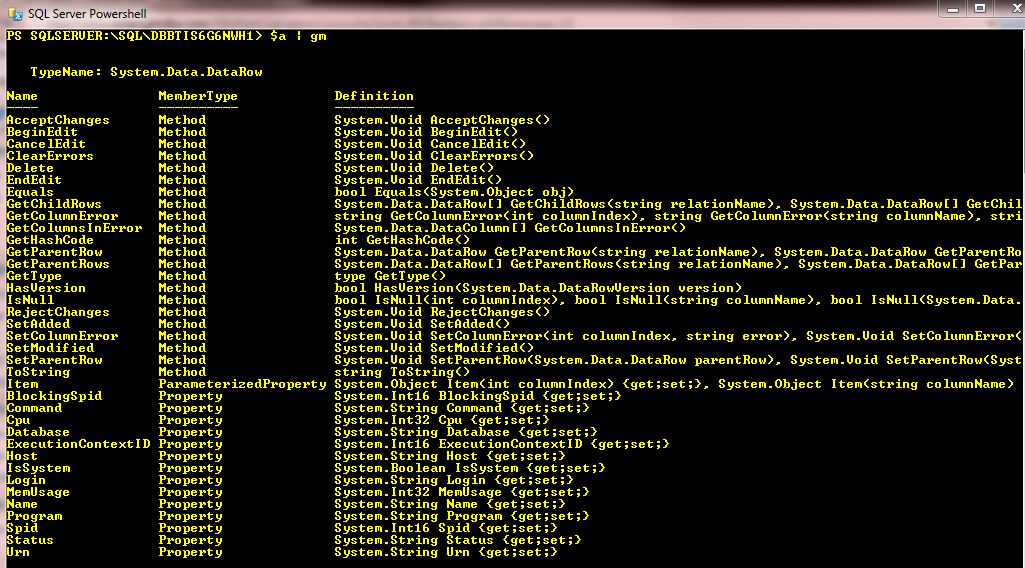
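Since a kill can’t be taken back, it doesn’t hurt to look at the list before you pipe it into KillProcess. Here’s a minimal sketch of that idea. Select-KillCandidates is a name made up for this example, and the fake rows only stand in for what (dir).EnumProcesses() returns, so it runs w/o a server.

```powershell
## Hypothetical helper: returns the rows that WOULD be killed. It kills nothing.
function Select-KillCandidates {
    param(
        $Processes,        ## rows from (dir).EnumProcesses(), or anything with the same properties
        [string]$Program   ## regex matched against the Program column
    )
    $Processes | Where-Object { $_.Program -match $Program }
}

## Stand-in rows (assumed shape: a Spid and a Program property per row).
$fake = @(
    (New-Object PSObject -Property @{ Spid = 55; Program = "Microsoft SQL Server Management Studio - Query" }),
    (New-Object PSObject -Property @{ Spid = 61; Program = "BusinessObjects" })
)

$victims = @(Select-KillCandidates -Processes $fake -Program "SQL Server Management Studio")
$victims | Format-Table Spid, Program   ## eyeball this before killing anything
```

Against a real box you’d pass (dir).EnumProcesses() instead of $fake, and once the list looks right, something like `$victims | %{(dir).KillProcess($_.Spid)}` does the deed.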

Now, there’s a treasure trove of stuff in here. Let’s take a look at this in detail, especially the EnumProcesses() method.

This method is overloaded so you’ve got some nice functionality built-in.

Here are the documented overloads:

$a = (dir).EnumProcesses() ## Gets all processes.

$a = (dir).EnumProcesses($False) ## Excludes system processes.

$a = (dir).EnumProcesses(69) ## Get info on a single spid... 69 in this case.

$a = (dir).EnumProcesses("domain\user") ## Gets processes run by a specified login.

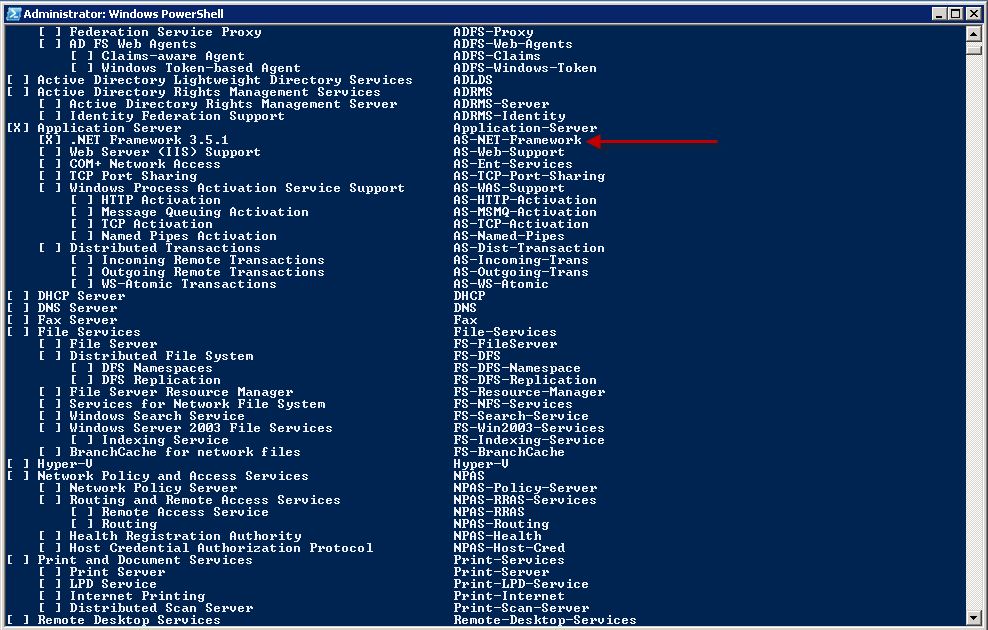

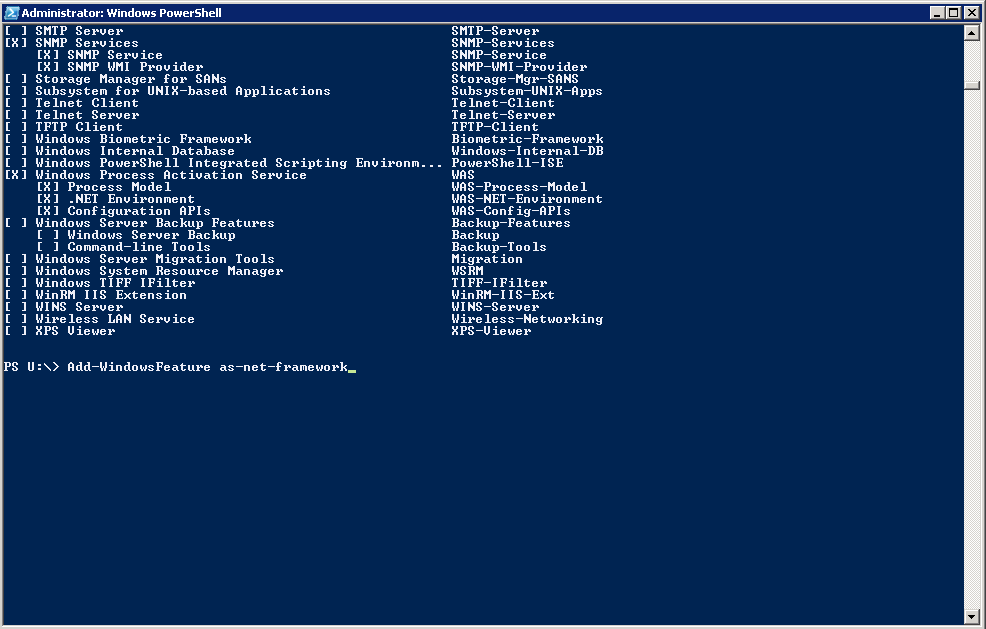

And now that you’ve got your list of processes, you can do a get-member on them to see what properties are available to you. So remember above when we killed spids by Program? Well, you can kill by anything returned as a property from your get-member. The screenshot shows the results I got from mine.
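To make the “kill by anything” idea concrete, here’s a rough sketch that picks spids by CPU instead of Program. It assumes your get-member output shows Cpu and Spid properties (check yours before trusting the names), Get-SpidsOverCpu is a made-up helper name, and the fake rows are only there so it runs w/o a server.

```powershell
## Hypothetical helper: pick spids at or above a CPU threshold, skipping your own session.
function Get-SpidsOverCpu {
    param(
        $Processes,        ## rows from (dir).EnumProcesses($False)
        [int]$MinCpu,      ## threshold, in whatever units the Cpu column reports
        [int]$MySpid = 0   ## your own spid, so you don't kill yourself
    )
    $Processes |
        Where-Object { $_.Cpu -ge $MinCpu -and $_.Spid -ne $MySpid } |
        ForEach-Object { [int]$_.Spid }
}

## Stand-in rows (assumed shape: a Spid and a Cpu property per row).
$fake = @(
    (New-Object PSObject -Property @{ Spid = 52; Cpu = 10 }),
    (New-Object PSObject -Property @{ Spid = 58; Cpu = 90000 }),
    (New-Object PSObject -Property @{ Spid = 69; Cpu = 120000 })
)

$spids = @(Get-SpidsOverCpu -Processes $fake -MinCpu 50000 -MySpid 69)
$spids   ## 58
```

Swap $fake for (dir).EnumProcesses($False) and finish with `$spids | %{(dir).KillProcess($_)}` when you’re ready to pull the trigger.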

Killing processes in PowerShell is so easy it almost makes me feel stupid for documenting it. And while you may be used to doing things like this in T-SQL, give this a try and I think you’ll find you like it much better once you get used to it.

And I mentioned that it scales to multiple boxes really well, and it does. I just didn’t show you that here because it’s pretty easy to figure out.
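For anyone who wants a starting point anyway, here’s one possible sketch of the multi-box version. The function name, the server names and the -Connect hook are all made up for illustration. The default hook builds a plain SMO Server object (SMO is already loaded in sqlps; in PS proper you’d have to load it yourself), and the fake hook at the bottom just lets you dry-run the loop w/o touching a real box.

```powershell
## Hypothetical helper: call KillAllProcesses for one DB on each box in a list.
function Stop-DbConnections {
    param(
        [string[]]$ServerName,
        [string]$Database,
        ## How to get a server object for a name. Default assumes SMO is loaded.
        [scriptblock]$Connect = { param($n) New-Object Microsoft.SqlServer.Management.Smo.Server $n }
    )
    foreach ($name in $ServerName) {
        $srv = & $Connect $name
        $srv.KillAllProcesses($Database)
        "$name -> $Database"   ## one line of output per box
    }
}

## Dry run: a fake server object whose KillAllProcesses does nothing.
$fakeConnect = {
    param($n)
    New-Object PSObject |
        Add-Member -MemberType ScriptMethod -Name KillAllProcesses -Value { param($db) } -PassThru
}

$result = @(Stop-DbConnections -ServerName "Server1", "Server2" -Database "SeansDB" -Connect $fakeConnect)
$result
```

Drop the -Connect argument to run it for real, and read the next paragraph before you do.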

And DO use this with care. It’s so much easier to kill everything on the box in PS than it is with T-SQL. And I’m not taking any responsibility for how you (mis)use this knowledge.